Principles for High Growth Products

This post is based on a talk I recently gave to our 2018 class of interns at Jet.com.


First, I would like to talk a little about the drivers behind these tech decisions.

Jet.com has always had big, ambitious goals, like our early goal of becoming an “everything store”. We would take technology and use it in new and interesting ways to save customers money while they shop online.

For example, we would strip all sorts of costs out of the supply chain and pass those savings on to customers. We also wanted to be the most transparent way to shop: we would charge a membership fee and not make a dime of profit on any of the items we sold online. How could it get more transparent than that?

On top of those core ideals about what Jet.com was in those early days, we had a crazy growth plan. VCs only want to see one thing: how fast you can take their millions and turn them into billions. That growth plan, of course, meant millions of customers would need to shop on technology that didn’t exist yet but had to scale on day one.

We would solve the chicken-and-egg problem present in all eCommerce ventures by using marketing and technology in new and interesting ways. Before Jet could sell brands like Apple, it had to contend with the fact that brands don’t want to sell on an unknown marketplace.

We would not let this, or any of the other big problems in eCommerce, stop us, and we were determined to ramp up to unprecedented scale faster than anyone had ever done before in this space.

The early Jet engineering team made some solid decisions about how to deliver all of this very quickly. These decisions ultimately became the guiding principles for a system we believe can scale to $20 billion plus in sales.

The big principle: Event Sourcing

“The thing about good decisions is that they guide you toward other good decisions”

A foundational decision, which would become a guiding principle for our whole architecture, was to use event sourcing as the primary design choice for the core product. In hindsight, this was a risky but brilliant move. I say risky because we would build what was arguably the largest fully event-sourced system in the world, at least as far as we knew at the time. Event sourcing is also not the most popular paradigm for building distributed systems, but boy, did it turn out to be a good one.

How does event sourcing actually work?

There are some great posts out there to help you understand this, and I will link to a few below, but I would like to cover the basics for anyone who isn’t familiar. Event sourcing relies on two components to work: an event store and an event bus.

The event store, as the name implies, stores all events immutably. That is, new events, including those that record changes to existing data, are appended without modifying anything already in the store. In a traditional database, updating a record overwrites it and the old data is lost; an event store instead keeps everything forever by appending each update as a new event and never modifying the old ones.

This turns out to be useful for many reasons. It lets us later build services on top of the event store that write these events out to an event bus. The data can then be consumed from the bus to do new and interesting things, or transformed in some meaningful way. This transformation is called a projection, and the projected data can then live in another data store that is better suited to a particular job, such as caching or querying.
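To make the append-only idea concrete, here is a minimal in-memory sketch in Python. It is not Equinox or any production event store; the `Event`, `EventStore`, and `project_cart` names and the `ItemAdded`/`ItemRemoved` event types are hypothetical, chosen only for illustration. Appends never modify earlier entries, and the current state of a cart is derived by replaying its stream through a projection.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    stream_id: str   # hypothetical: one stream per cart
    event_type: str
    data: dict


class EventStore:
    """Toy append-only store: events are added, never updated or deleted."""

    def __init__(self) -> None:
        self._log = []  # every Event ever appended, in append order

    def append(self, event: Event) -> int:
        self._log.append(event)
        return len(self._log) - 1  # position of the new event in the log

    def read_stream(self, stream_id: str) -> list:
        return [e for e in self._log if e.stream_id == stream_id]


def project_cart(events) -> dict:
    """Projection: fold a cart's events into its current contents (sku -> qty)."""
    items = {}
    for e in events:
        if e.event_type == "ItemAdded":
            items[e.data["sku"]] = items.get(e.data["sku"], 0) + e.data["qty"]
        elif e.event_type == "ItemRemoved":
            items.pop(e.data["sku"], None)
    return items


store = EventStore()
store.append(Event("cart-1", "ItemAdded", {"sku": "A", "qty": 2}))
store.append(Event("cart-1", "ItemAdded", {"sku": "B", "qty": 1}))
store.append(Event("cart-1", "ItemRemoved", {"sku": "B"}))

cart_events = store.read_stream("cart-1")
print(len(cart_events), project_cart(cart_events))  # 3 {'A': 2}
```

After the removal, all three events are still in the stream; only the projection reflects that item B is gone, which is what makes it possible to build new projections from old history later.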

This architecture becomes powerful because, as events are broadcast onto an event bus and paired with microservices, we can do any number of things with them. From a single Cart Checkout event, one microservice could send an order confirmation email, another could route the order to the physical warehouse floor, and yet another could push the order into a large data store, such as HDFS or anywhere else that supports SQL-style queries, for analytics. The number of consumers is nearly unlimited, and what they do with the data is up to them. This sort of system scales incredibly well. At Jet, the event bus is Kafka and the event store is Equinox, an in-house API surface that stores events in an event-sourced manner on Azure.
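A toy illustration of this fan-out, assuming a simple in-memory publish/subscribe bus rather than Kafka's actual client API: three hypothetical consumers (email, warehouse, analytics) each subscribe to the same `cart-checkout` topic, and one published event reaches all of them. In Kafka terms, each of these consumers would roughly correspond to its own consumer group.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Toy in-memory bus: every subscriber to a topic receives every event."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(event)


# Hypothetical downstream "services", each doing one thing with the same event
sent_emails, warehouse_queue, analytics_rows = [], [], []

bus = EventBus()
bus.subscribe("cart-checkout", lambda e: sent_emails.append(e["email"]))
bus.subscribe("cart-checkout", lambda e: warehouse_queue.append(e["order_id"]))
bus.subscribe("cart-checkout", lambda e: analytics_rows.append((e["order_id"], e["total"])))

bus.publish("cart-checkout", {"order_id": "o-1", "email": "a@example.com", "total": 42.0})
print(sent_emails, warehouse_queue, analytics_rows)
# ['a@example.com'] ['o-1'] [('o-1', 42.0)]
```

Adding a fourth consumer is one more `subscribe` call; the publisher does not change, which is the property that lets the number of consumers grow freely.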

This article covers many of Jet’s tech principles, so I won’t go into more detail on event sourcing here, but if you want a more in-depth look at how we scaled event sourcing at Jet, this is a must-read. The Kickstarter engineering team also has a great recent write-up on event sourcing that covers the basics and confirms that the paradigm is gaining steam; it can be found here.

The next big principle: Asynchronous first

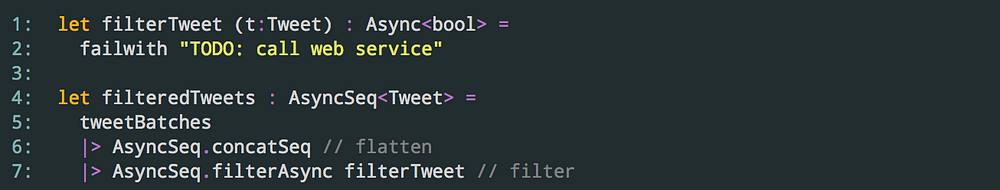

Event sourcing also lends itself really well to treating everything as a stream of data and doing everything asynchronously. This is advantageous for many reasons, but the big one is that a computer has many components that can work independently: network cards, disk controllers, CPUs, and so on. Asynchronous programming lets us use each of these as it is needed and as it becomes ready, instead of leaving them idle while one waits on another. Event sourcing pushes the architecture to work well in an asynchronous-first world.
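A small Python `asyncio` sketch of the idea: three simulated I/O waits (stand-ins for a network call, a disk read, and a database query; the `fake_io` helper is hypothetical) are started together, so the total time is roughly the longest single wait rather than the sum of all three. This illustrates asynchronous I/O in general, not F# async specifically.

```python
import asyncio
import time


async def fake_io(name: str, seconds: float) -> str:
    # asyncio.sleep stands in for waiting on a network card, disk, or database
    await asyncio.sleep(seconds)
    return name


async def main() -> list:
    # All three waits are in flight at once, so total time is about 0.2s, not 0.6s
    return await asyncio.gather(
        fake_io("network", 0.2),
        fake_io("disk", 0.2),
        fake_io("database", 0.2),
    )


start = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.2f}s")
```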

One of our lead engineers, Leo Gorodinski, is famous around here for saying:

“the natural state of computers is asynchronous and it is humans that try to force them to act synchronously”.

Indeed, asynchrony is the natural state of computers, which can do multiple things at once and do them well. We humans, on the other hand, aren’t great at multitasking and tend to prefer doing things in order, one after the other, synchronously. Our programming languages and models have, unfortunately, evolved to do things the human way, but there are tremendous scale and efficiency advantages to be had if we stop fighting this paradigm and treat asynchrony as a first-class citizen, which is exactly what we do at Jet.

In the event-sourcing world, writing messages to a bus and reading them back is almost always done asynchronously. Processing those messages can be asynchronous as well, because we deliberately design these systems to be asynchronous first.
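As a rough sketch of asynchronous writing and reading, the following uses an `asyncio.Queue` as an in-process stand-in for the event bus (not Kafka). A hypothetical producer publishes checkout events while a consumer reads and handles them concurrently. Because the queue is bounded, a fast producer waits for the consumer instead of buffering without limit.

```python
import asyncio


async def producer(bus: asyncio.Queue, n: int) -> None:
    for i in range(n):
        # Suspends (without blocking the thread) if the queue is full
        await bus.put({"type": "CartCheckout", "order_id": i})
    await bus.put(None)  # sentinel: no more events


async def consumer(bus: asyncio.Queue, handled: list) -> None:
    while True:
        event = await bus.get()  # suspends until an event is available
        if event is None:
            break
        handled.append(event["order_id"])


async def run(n: int) -> list:
    bus = asyncio.Queue(maxsize=10)  # bounded stand-in for the event bus
    handled = []
    await asyncio.gather(producer(bus, n), consumer(bus, handled))
    return handled


print(asyncio.run(run(5)))  # [0, 1, 2, 3, 4]
```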

If you are interested in a much deeper look at how async works in F# and how we use it extensively at Jet, my colleague Leo has written an excellent, detailed post that can be found here.

The third major decision: Microservices

We did not choose a microservice architecture because it was trendy. We knew well that microservices can bring a great deal of complexity to a system. We deliberately went in this direction because microservices lend themselves incredibly well to event sourcing. In fact, I would say these two architectural paradigms are made for each other.

For example, a small service that does one thing, such as reading a single event type and doing something interesting with it, is a perfect fit in this world.

To revisit the Cart Checkout example from earlier, say we have a single service that reads cart checkout events and then sends an order confirmation email to the user.

This is a single microservice that does one thing really well. For a developer who needs to change it, understanding the service and its running code is straightforward.

Then, because these events sit on an event bus, this email microservice can be scaled horizontally by running more instances of it, to basically whatever scale we like. If a single instance cannot keep up with the number of carts being checked out and broadcast onto the event bus, we simply add more instances.
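One common way this kind of horizontal scaling works, and roughly how Kafka consumer groups behave, is partitioning: each event is routed by key to a partition, and each partition is owned by exactly one service instance at a time. The sketch below simulates that in plain Python. The partition count, the round-robin assignment, and all names are assumptions for illustration, not Kafka's actual assignment strategy or Jet's configuration.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 6  # assumption: the topic has 6 partitions


def partition_for(key: str) -> int:
    # crc32 is stable across runs, unlike Python's randomized built-in str hash
    return zlib.crc32(key.encode("utf-8")) % NUM_PARTITIONS


def assign_partitions(num_instances: int) -> dict:
    # Round-robin: partition p is owned by instance p % num_instances
    return {p: p % num_instances for p in range(NUM_PARTITIONS)}


def route(cart_ids: list, num_instances: int) -> dict:
    """Return {instance: [cart ids it handles]} for a given instance count."""
    owner = assign_partitions(num_instances)
    work = defaultdict(list)
    for cart_id in cart_ids:
        work[owner[partition_for(cart_id)]].append(cart_id)
    return dict(work)


carts = [f"cart-{i}" for i in range(100)]
for n in (1, 3):
    load = {inst: len(ids) for inst, ids in sorted(route(carts, n).items())}
    print(n, "instance(s):", load)
```

Adding instances spreads the same partitions over more workers, and because each partition has a single owner, every cart is still handled exactly once. With more instances than partitions, the extra instances would sit idle, so the partition count caps how far a consumer group can scale out.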

As an example, a few great engineers on my team did exactly this (adding instances of a microservice) to bring our transactional email system from an SLA of hours at Black Friday peak throughput down to milliseconds, something that was only possible because of the design decisions and principles above.

The fourth principle: Functional first and stateless

There is a big push in our industry today toward functional programming. People are waking up to the power of this paradigm, but I think the push is driven even more by the rise of the public cloud.

One of the key characteristics, and biggest benefits, of functional languages is that they tend to be immutable by default, and immutability, it turns out, is a very desirable property if you care about referential transparency. That is basically the guarantee that a function called with the same inputs will always return the same result, with no hidden side effects.

Imperative code can’t make this guarantee by default, because depending on the current state of objects, the same call may return vastly different answers. Referential transparency lets you make guarantees about your code in the cloud, a place where few are willing to make any sort of guarantees.

We landed on F# at Jet, and the language has a lot of really nice features, the biggest being conciseness. F# is a high-level language that, in some cases, lets you do in 50 lines of code what may take 500 lines in Java or C#. Most importantly, thanks to its preference for immutability and immutable data structures, F# has allowed us to build stateless microservices that scale really well in our event-sourced world.

When all of these tech decisions and principles come together (event sourcing, functional programming, asynchronous-first design, and tiny microservices), we get a system at Jet that stores events immutably, transforms them, and passes messages along, all while the services themselves hold no state and scale really well. This combination is incredibly powerful and lets us squeeze every ounce of juice out of our underlying machines. It gave us the ability to parallelize recklessly: if you don’t have shared mutable state, you don’t have race conditions, at least not in your own code.
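A sketch of why statelessness makes parallelism safe, assuming a hypothetical `to_email_request` transform: because the function is pure and shares no mutable state, it can be mapped over events on a thread pool with no locks, and the results match a sequential run. Python's `ThreadPoolExecutor` is used only for simplicity; in CPython, CPU-bound work would need processes to actually run faster, but the correctness argument is the same.

```python
from concurrent.futures import ThreadPoolExecutor


def to_email_request(event: dict) -> dict:
    # Pure: output depends only on the input event; no shared state is read or written
    return {"to": event["email"], "subject": f"Order {event['order_id']} confirmed"}


events = [{"order_id": i, "email": f"user{i}@example.com"} for i in range(1000)]

sequential = [to_email_request(e) for e in events]
with ThreadPoolExecutor(max_workers=8) as pool:
    # pool.map preserves input order; no locks are needed because nothing is shared
    parallel = list(pool.map(to_email_request, events))

print(parallel == sequential)  # True
```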

The fifth important factor: Public clouds

Jet had to be on the public cloud because we wanted to compete and grow at a blistering pace. We wanted to focus on innovating in eCommerce, not on servers and infrastructure. When Jet was just getting started, the public cloud wasn’t such an obvious choice.

When Jet started, the number one provider of these sorts of services, by a very wide margin, was Amazon AWS. However, it did not make much sense to pay a potential competitor for cloud services. Our CTO’s decision to use Azure led to the decision to use .NET, since most of the Azure SDKs at the time really only supported .NET.

All of these technologies have evolved a great deal, and with .NET Core, Microsoft has become a real contender in the open source space. This has worked out incredibly well for us.

In 2018, it is now obvious to startups that you should build things on the public cloud and focus on the business problem and the product you are trying to build. However, many of these decisions back in those days were fairly risky and not as obvious as they are now.

A quick summary

The connections between our technology choices at Jet are easy to see in hindsight, but it was these core decisions and principles at the beginning that led us down this path.

The parallels between our choice of programming languages, the architecture of the platform and other guiding principles can be drawn as follows:

The key to event sourcing is immutable data, which led us to choose a functional language (F#) that defaults to immutable data.

Event-driven microservices are modeled as: input → do something → output

Functions with no side effects are modeled as: input → do something → output

Events and services are composable → functions are composable. Writing things to an event bus is inherently asynchronous, and that forced us to think in an asynchronous fashion.
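The “input → do something → output” shape can be sketched as function composition in Python. The `compose` helper and the three steps below (including the 8% tax rate) are hypothetical; the point is that each step is a pure function whose output feeds the next, much as one service's output event can feed another service.

```python
from functools import reduce
from typing import Callable


def compose(*steps: Callable) -> Callable:
    # compose(f, g, h)(x) == h(g(f(x))): each step's output is the next step's input
    return lambda x: reduce(lambda acc, step: step(acc), steps, x)


def parse(raw: str) -> dict:
    order_id, total = raw.split(",")
    return {"order_id": order_id, "total": float(total)}


def apply_tax(order: dict) -> dict:
    # Returns a new dict rather than mutating the input (hypothetical 8% rate)
    return {**order, "total": round(order["total"] * 1.08, 2)}


def to_message(order: dict) -> str:
    return f"{order['order_id']}: ${order['total']:.2f}"


pipeline = compose(parse, apply_tax, to_message)
print(pipeline("o-1,100"))  # o-1: $108.00
```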

How solid were these ideas and principles?

Did they stand the test of time?

It is telling that we got to 10 million plus customers without rewriting any of our core platform, outside of upgrading and adding new things as needed. It is also telling that we are confident we can continue to scale this to even a hundred million customers.

Perhaps some of the most important principles at Jet have little to do with our technology and more to do with our culture. Jet’s culture of being bold, trying things, and continuously learning and growing is incredibly contagious. When you use and rely on so many new technologies, it is impossible to hire people who already know all of them. What you get instead are folks who are hungry to learn and grow.

As we push forward, it is good to look back at the foundational decisions and guiding principles that were so important to the success of Jet Tech, so that they continue to influence and guide our future thinking.

You can follow me on Twitter, where I have started posting regularly about this and many other tech topics.

Acknowledgements

Thanks to Chris Shei, Drew Schaeffer, Leo Gorodinski and many others for comments, edits, suggestions.